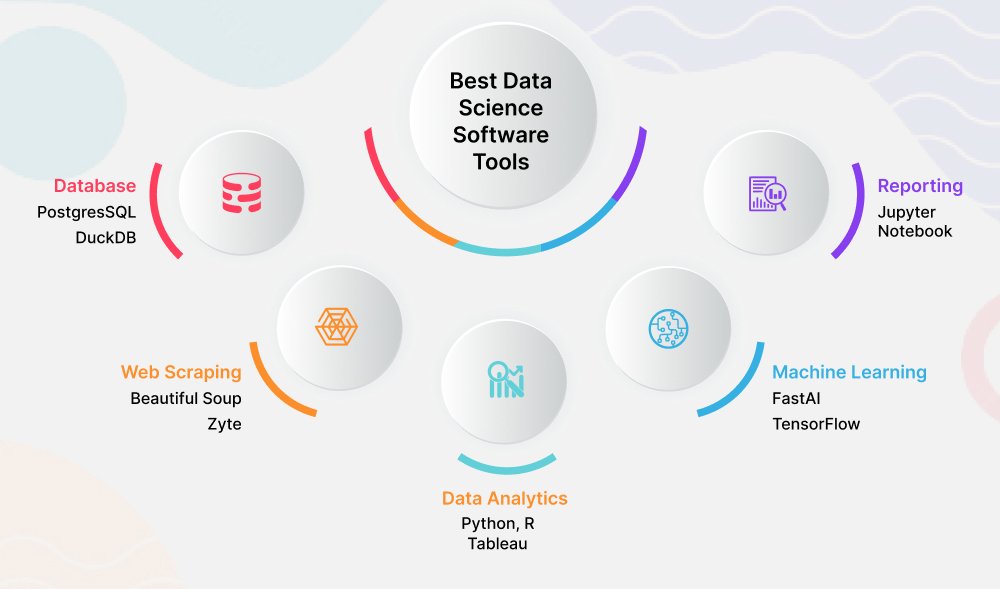

Best Tools for Data Science

Data scientists use software, programming languages, and platforms referred to as “tools” to carry out the tasks of the data science process: collecting, cleaning, organizing, analyzing, modeling, and deploying data and models. Many of these tools are designed to handle large volumes of data efficiently, enabling data scientists to draw evidence-based conclusions and make informed decisions.

Among the most popular data science tools are Tableau, R, Python, Hadoop, and SQL. Because each tool has its own strengths and capabilities, data scientists can choose the one best suited to the task at hand. New tools are developed regularly as technology advances and the needs of data scientists evolve in a constantly changing data landscape.

Below is a list of widely used data science tools, grouped by task.

Data Collection and Preparation:

1. Apache NiFi:

An open-source tool for automating the flow of data between systems, including data ingestion and distribution.

2. Apache Kafka:

A distributed event streaming framework that enables the development of real-time data pipelines and streaming applications.

3. Apache Flume:

A distributed service for efficiently collecting, aggregating, and moving large volumes of log data.

4. Apache Airflow:

A platform for programmatically authoring, scheduling, and monitoring workflows, which makes it well suited to orchestrating complex data pipelines.

5. Pandas:

A Python library for data manipulation and analysis that provides data structures, such as the DataFrame, and functions for organizing, transforming, and analyzing data.

6. OpenRefine:

A data cleaning and transformation tool that lets users explore messy data, fix errors, and reconcile inconsistencies.

7. Trifacta Wrangler:

An interactive data preparation tool whose easy-to-use visual interface speeds up data cleaning and transformation.
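Tools such as OpenRefine help reconcile inconsistent spellings of the same value. The sketch below gives a rough illustration of the idea by grouping values under a normalized key. It is similar in spirit to the “fingerprint” key-collision clustering method described in OpenRefine's documentation, but it is plain Python and not OpenRefine's code. The `fingerprint` and `cluster_values` helpers and the sample values are hypothetical.

```python
import re
import unicodedata
from collections import defaultdict


def fingerprint(value: str) -> str:
    """Build a normalized key for a raw string value (hypothetical helper)."""
    # Fold accented characters to ASCII, e.g. "ü" -> "u".
    folded = unicodedata.normalize("NFKD", value).encode("ascii", "ignore").decode("ascii")
    # Trim surrounding whitespace, lowercase, and remove punctuation.
    cleaned = re.sub(r"[^\w\s]", "", folded.strip().lower())
    # De-duplicate and sort tokens so that word order does not matter.
    tokens = sorted(set(cleaned.split()))
    return " ".join(tokens)


def cluster_values(values: list[str]) -> dict[str, list[str]]:
    """Group values whose keys collide; keep only groups with differing spellings."""
    groups: dict[str, list[str]] = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return {key: members for key, members in groups.items() if len(set(members)) > 1}


if __name__ == "__main__":
    raw = ["New York", "new york ", "York, New", "Boston", "Zürich", "Zurich"]
    for key, members in cluster_values(raw).items():
        print(f"{key!r}: {members}")
```

In a real cleaning workflow, each cluster would be reviewed and merged into a single canonical value rather than merged automatically.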

Data Analysis and Exploration:

1. R:

A programming language and environment for statistical computing and graphics, with a large ecosystem of packages for data analysis and visualization.

2. Python:

A general-purpose programming language widely used for scientific computing, numerical analysis, and data visualization, thanks to libraries such as NumPy, SciPy, and Matplotlib.

3. Jupyter Notebook/JupyterLab:

Open-source web applications for creating and sharing documents that combine live code, equations, visualizations, and narrative text.

4. Tableau:

A powerful data visualization tool that lets users create dynamic dashboards and reports for sharing and evaluating insights gleaned from data.

5. Google Data Studio (now Looker Studio):

A free tool for building interactive dashboards and reports from data in multiple sources, such as BigQuery, Google Sheets, and Google Analytics.

Machine Learning:

1. Scikit-learn:

A Python machine learning library that provides simple, efficient tools for data mining and analysis, including supervised and unsupervised learning algorithms.

2. TensorFlow:

An open-source machine learning framework created by Google for building and training models, including deep neural networks.

3. PyTorch:

A deep learning framework developed by Facebook’s AI Research lab that provides tensors and dynamic computational graphs for building and training deep learning models.

4. XGBoost:

An optimized, distributed gradient boosting library that provides portable, scalable, and accurate implementations of gradient-boosted decision trees.

5. H2O.ai:

An open-source platform that can be used to create and deploy machine learning models at scale, offering techniques for regression, classification, anomaly detection, and clustering.
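XGBoost implements gradient boosting. This technique builds an ensemble by repeatedly fitting a small tree to the errors of the current model and adding a scaled-down copy of that tree. The sketch below shows only this core loop. It assumes squared-error loss and one-split trees (“stumps”) on a single numeric feature. It is not XGBoost's API: XGBoost layers regularized objectives, second-order gradient information, and efficient parallel and distributed tree construction on top of this idea. All function names are hypothetical.

```python
# Minimal gradient-boosting sketch: squared-error loss, depth-1 trees, one feature.
# Hypothetical helpers for illustration only; not XGBoost's API.


def fit_stump(xs, residuals):
    """Fit a one-split regression tree to the residuals by least squares.

    Returns (threshold, left_value, right_value).
    """
    uniq = sorted(set(xs))
    if len(uniq) < 2:
        # No split is possible, so predict the mean residual everywhere.
        mean = sum(residuals) / len(residuals)
        return uniq[0], mean, mean
    best = None
    # Try a threshold halfway between each pair of consecutive distinct x values.
    for lo, hi in zip(uniq, uniq[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    _, t, lv, rv = best
    return t, lv, rv


def stump_predict(stump, x):
    t, lv, rv = stump
    return lv if x <= t else rv


def fit_boosting(xs, ys, n_rounds=50, learning_rate=0.1):
    """Add stumps one at a time, each fitted to the current ensemble's residuals."""
    base = sum(ys) / len(ys)  # best constant prediction under squared loss
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For the loss (y - p)**2 / 2, the negative gradient w.r.t. p is y - p.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each stump's contribution by the learning rate before adding it.
        preds = [p + learning_rate * stump_predict(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def boosting_predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


if __name__ == "__main__":
    xs = list(range(10))
    ys = [0.0] * 5 + [5.0] * 5  # a step-shaped target
    model = fit_boosting(xs, ys)
    baseline = mse(ys, [sum(ys) / len(ys)] * len(ys))
    boosted = mse(ys, [boosting_predict(model, x) for x in xs])
    print(f"baseline MSE: {baseline:.4f}, boosted MSE: {boosted:.6f}")
```

On this step-shaped data, every stump splits at 4.5. With a learning rate of 0.1, each round shrinks every residual by 10%. The training MSE therefore falls from 6.25 for the constant baseline to well under 0.01 after 50 rounds.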

Big Data and Distributed Computing:

1. Apache Spark:

A unified analytics engine for large-scale data processing, with APIs for batch processing, streaming, SQL, and machine learning.

2. Apache Hadoop:

An open-source framework that distributes the storage and processing of large datasets across clusters of computers using simple programming models.

3. Databricks:

A cloud platform built on Apache Spark that offers collaborative notebooks, automated workflows, and job scheduling for data science, data engineering, and machine learning.
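Hadoop's classic programming model is MapReduce. The single-process Python sketch below mimics its three stages (map, shuffle, reduce) on the traditional word-count example. It does not use Hadoop's API, and the function names are hypothetical. On a real cluster, the framework runs many map and reduce tasks in parallel on different nodes and moves the intermediate key-value pairs between them during the shuffle.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document: str):
    """Map: emit a (word, 1) pair for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework does between stages."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values collected for one key."""
    return key, sum(values)


def word_count(documents):
    # Each document plays the role of one input split handled by a mapper.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "The fox"]
    for word, count in sorted(word_count(docs).items()):
        print(word, count)
```

Map and reduce functions keep no shared state. The framework is therefore free to split the input across machines and rerun failed tasks.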

Data Reporting and Visualization:

1. Plotly:

An interactive graphing library for Python that produces publication-quality graphs and dashboards.

2. Seaborn:

A Python data visualization library based on Matplotlib that provides a high-level interface for drawing attractive and informative statistical graphics.

3. Altair:

A declarative statistical visualization library for Python, built on Vega-Lite, that generates interactive charts from concise specifications.

4. Microsoft Power BI:

A business analytics service that combines interactive visualizations with business intelligence tools for creating dashboards and reports.

5. QlikView and Qlik Sense:

Business intelligence tools that let users build interactive dashboards and reports from multiple data sources.

Data Warehousing and SQL:

1. Snowflake:

A cloud-based data warehousing platform for storing and querying very large volumes of structured and semi-structured data.

2. Google BigQuery:

A fully managed data warehouse that runs fast SQL queries on large datasets and offers real-time analytics and built-in machine learning capabilities.

3. Amazon Redshift:

A fully managed data warehouse service that lets users analyze data by running standard SQL queries.
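Snowflake, BigQuery, and Redshift each have their own SQL dialect and client libraries, but a typical analytical query looks much the same across them. The sketch below uses Python's built-in `sqlite3` module as a local stand-in. It runs a portable aggregate query (GROUP BY, HAVING, ORDER BY) against a hypothetical `sales` table. Querying an actual cloud warehouse would require that vendor's own connector.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, hypothetical `sales` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales (region, product, amount) VALUES (?, ?, ?)",
        [
            ("north", "widget", 120.0),
            ("north", "gadget", 80.0),
            ("south", "widget", 200.0),
            ("south", "widget", 50.0),
            ("east", "gadget", 30.0),
        ],
    )
    return conn


def revenue_by_region(conn: sqlite3.Connection, min_revenue: float):
    """Aggregate per region, keep regions above a revenue threshold, sort descending."""
    query = """
        SELECT region, COUNT(*) AS orders, SUM(amount) AS revenue
        FROM sales
        GROUP BY region
        HAVING SUM(amount) > ?
        ORDER BY revenue DESC
    """
    # Parameter binding (?) avoids building SQL strings by hand.
    return conn.execute(query, (min_revenue,)).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    for region, orders, revenue in revenue_by_region(conn, min_revenue=100):
        print(region, orders, revenue)
```

Here, HAVING filters whole groups after aggregation, while WHERE would filter individual rows before it. With a threshold of 100, the `east` region is excluded.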

Notebook and Workflow Platforms:

1. Apache Zeppelin:

A web-based notebook for data analytics and visualization that supports multiple languages, including Scala, Python, SQL, and Markdown.

2. KNIME:

An open-source platform for data analytics, reporting, and integration that offers visual workflows for data preprocessing, analysis, and visualization.

3. RapidMiner:

An open-source data science and machine learning platform featuring a visual workflow designer and tools for data preprocessing, modeling, and evaluation.

These are just a few of the many data science tools available, each with capabilities suited to different parts of the data science workflow. To analyze data efficiently and extract useful insights, data scientists often combine several tools, depending on their goals, preferences, and level of experience.